Podcast Interview – The Intersection Between Privacy, AI, and Cybersecurity

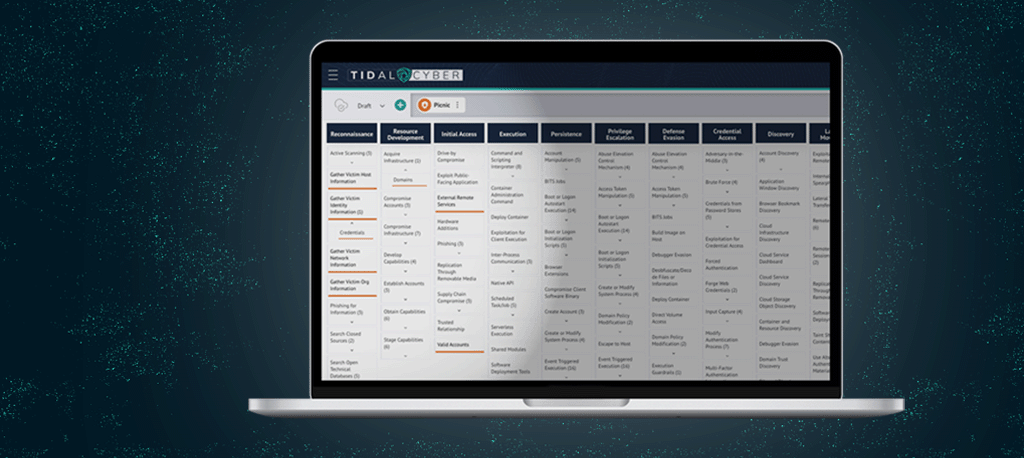

If you are a GRC professional or a cybersecurity practitioner, you will find this episode of Picnic’s Podcast Interviews timely and relevant. Matt Polak, CEO of Picnic, hosts a panel