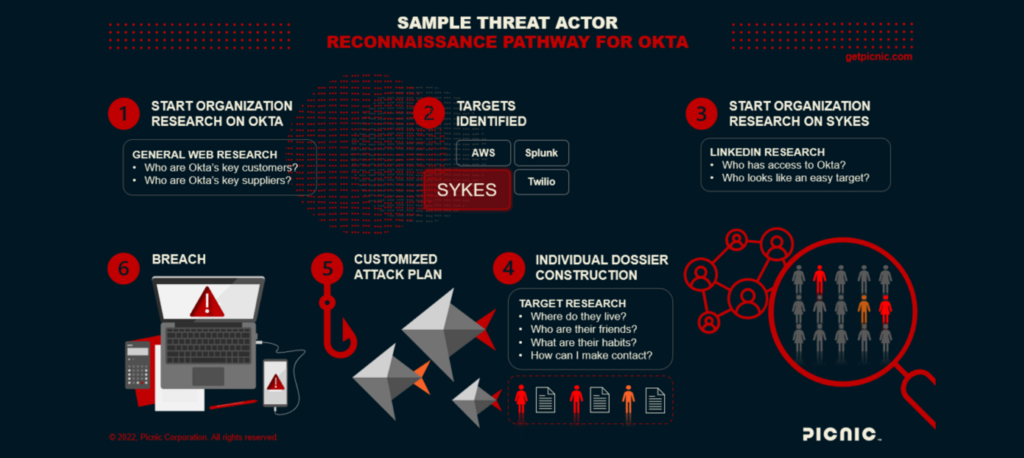

Picnic Corporation Unveils Game-Changing Human Risk API

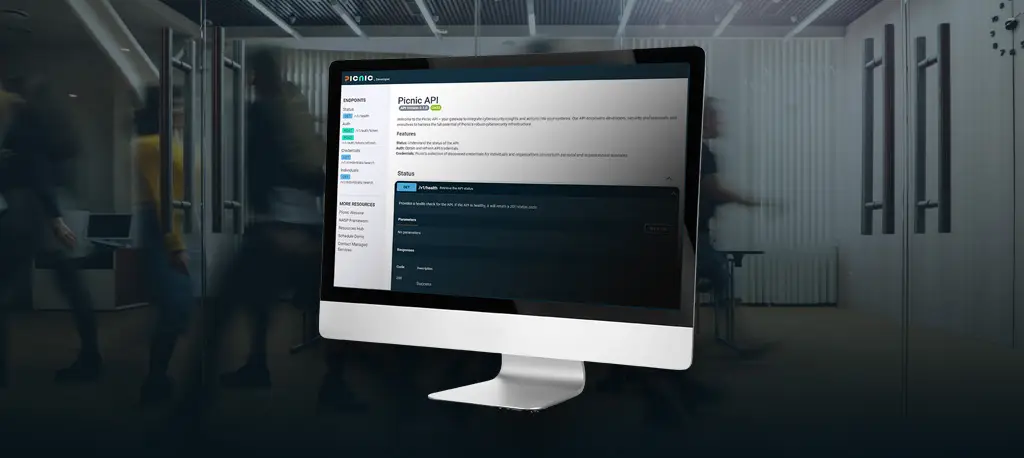

WASHINGTON, D.C., U.S., April 22, 2024 — Picnic Corporation, a pioneer in cybersecurity innovation, today announced the launch of its groundbreaking Human Risk API. This technology offers a unique solution
