CoinsPaid July 2023 Social Engineering Attack

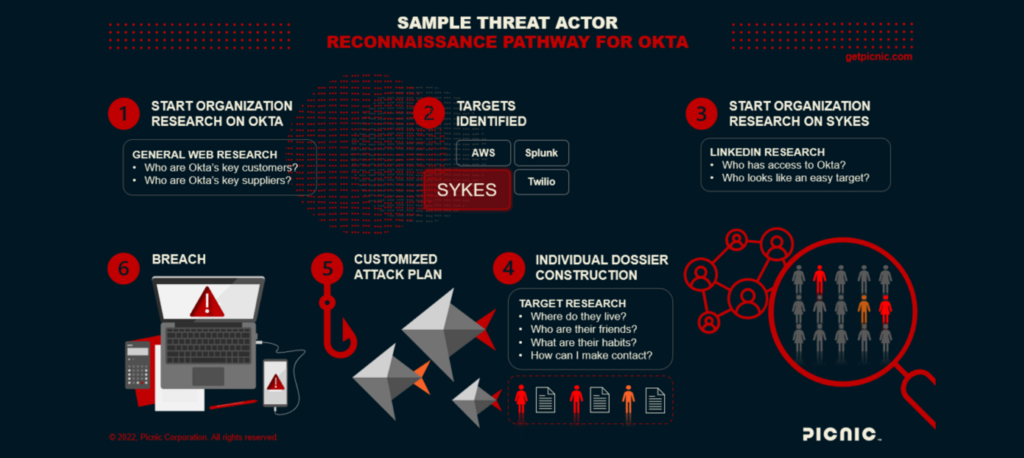

Incident Name: CoinsPaid Social Engineering Attack

Date of Report: August 7th, 2023

Date of Incident: July 22nd, 2023

Summary: CoinsPaid, one of the world’s largest cryptocurrency payment providers, suffered
