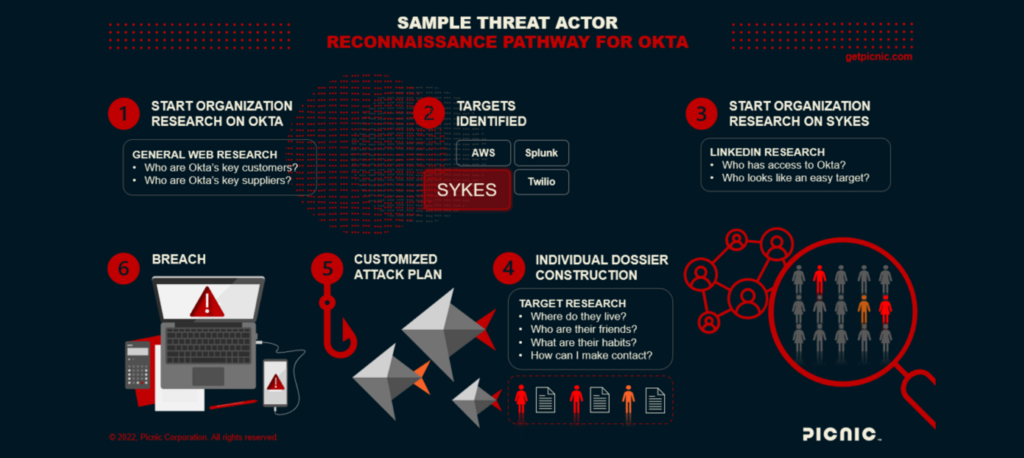

For Lapsus$ social engineers, the attack vector is dealer’s choice

By Matt Polak, CEO of Picnic

Two weeks ago, at a closed meeting of cyber leaders focused on emerging threats, the group agreed that somewhere between “most” and “100%” of cyber
